Fu-Yun Wang (IPA: [fu˧˥ yn˧˥ wɑŋ]) is a third-year Ph.D. candidate at MMLab@CUHK. He works on post-training. Currently vibing with world models and agentic stuff.

Job market 2027. Looking for opportunities (industry or postdoc) to work on interesting projects. Always happy to chat early.

Recent Work

Method

PromptRL jointly trains language models and flow-matching models in a unified RL loop for text-to-image generation, using LMs as adaptive prompt refiners.

- Unified RL Training: Joint optimization of both LM and FM in a single loop

- Exploration Collapse Solution: Overcomes insufficient diversity through adaptive prompting

- Test-time Adaptation: Adaptive prompt refinement at inference

Method

ConsistencySolver is a lightweight, learnable high-order ODE solver derived from general linear multistep methods and optimized via reinforcement learning (PPO). It adapts the integration coefficients to each sampling step, improving both preview fidelity and consistency with the full-step output without modifying the base diffusion model.

- Diffusion Preview Paradigm: Fast few-step preview → user evaluation → full-step refinement only when satisfied

- Learnable ODE Solver: Adaptive multistep coefficients predicted by a lightweight MLP, trained end-to-end via RL

- Preview Consistency: Preserves the deterministic PF-ODE mapping so preview closely matches the final output

- Results: FID on par with Multistep DPM-Solver using 47% fewer steps; outperforms distillation baselines
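To make the "linear multistep" idea above concrete, here is a minimal sketch of an explicit linear multistep update whose coefficients are supplied per step by a callable. It is not the ConsistencySolver implementation: `multistep_solve` and `coeff_fn` are hypothetical names, the ODE is a scalar toy problem rather than a diffusion PF-ODE, and the fixed Adams-Bashforth coefficients stand in for the values a learned MLP would predict. No RL training is shown.

```python
import math
from typing import Callable, List, Sequence


def multistep_solve(
    f: Callable[[float, float], float],
    x0: float,
    t0: float,
    t1: float,
    num_steps: int,
    coeff_fn: Callable[[int, int], Sequence[float]],
) -> float:
    """Integrate dx/dt = f(x, t) with an explicit linear multistep rule.

    coeff_fn(step, history_len) returns the weights applied to the stored
    derivative evaluations, newest first. It is a hypothetical stand-in
    for a learned coefficient predictor.
    """
    h = (t1 - t0) / num_steps
    x, t = x0, t0
    history: List[float] = []  # past derivative values, newest first
    for step in range(num_steps):
        history.insert(0, f(x, t))
        coeffs = coeff_fn(step, len(history))
        # x_{n+1} = x_n + h * sum_j b_j * f_{n-j}
        x = x + h * sum(b * d for b, d in zip(coeffs, history))
        t = t + h
        history = history[:2]  # a 2-step rule only needs two past values
    return x


def adams_bashforth2(step: int, history_len: int) -> Sequence[float]:
    # Fixed textbook coefficients; the first step falls back to Euler.
    return (1.0,) if history_len < 2 else (1.5, -0.5)


# Toy problem: dx/dt = -x with x(0) = 1, whose exact solution is exp(-t).
approx = multistep_solve(lambda x, t: -x, 1.0, 0.0, 1.0, 20, adams_bashforth2)
error = abs(approx - math.exp(-1.0))
```

Swapping `adams_bashforth2` for a function that returns different weights at each step is the hook where step-adaptive coefficients would enter in this sketch.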

Research Summary

Research Directions

Internship Experience

ByteDance Seed (Current)

Research Intern · 2025.10 - Present

Video Generation, Multimodal Models

Mentor: Haoqi Fan

Reve Inc

Research Intern · 2025.6 - 2025.11

Multimodal LMs, Diffusion Models, RL

Supervised by: Dr. Han Zhang

Google DeepMind

Research Intern · 2025.2 - 2025.5

Diffusion Distillation, RL

Supervised by: Dr. Long Zhao, Dr. Ting Liu, Dr. Hao Zhou, Dr. LiangZhe Yuan

Collaborated with Prof. Bohyung Han, Prof. Boqing Gong

Avolution AI (acquired by MiniMax)

Research Collaboration · 2023.10 - 2024.10

Video Diffusion, Distillation

Collaborated with: Dr. Zhaoyang Huang, Dr. Xiaoyu Shi, Weikang Bian

Tencent AI Lab

Research Intern · 2022.6 - 2022.12

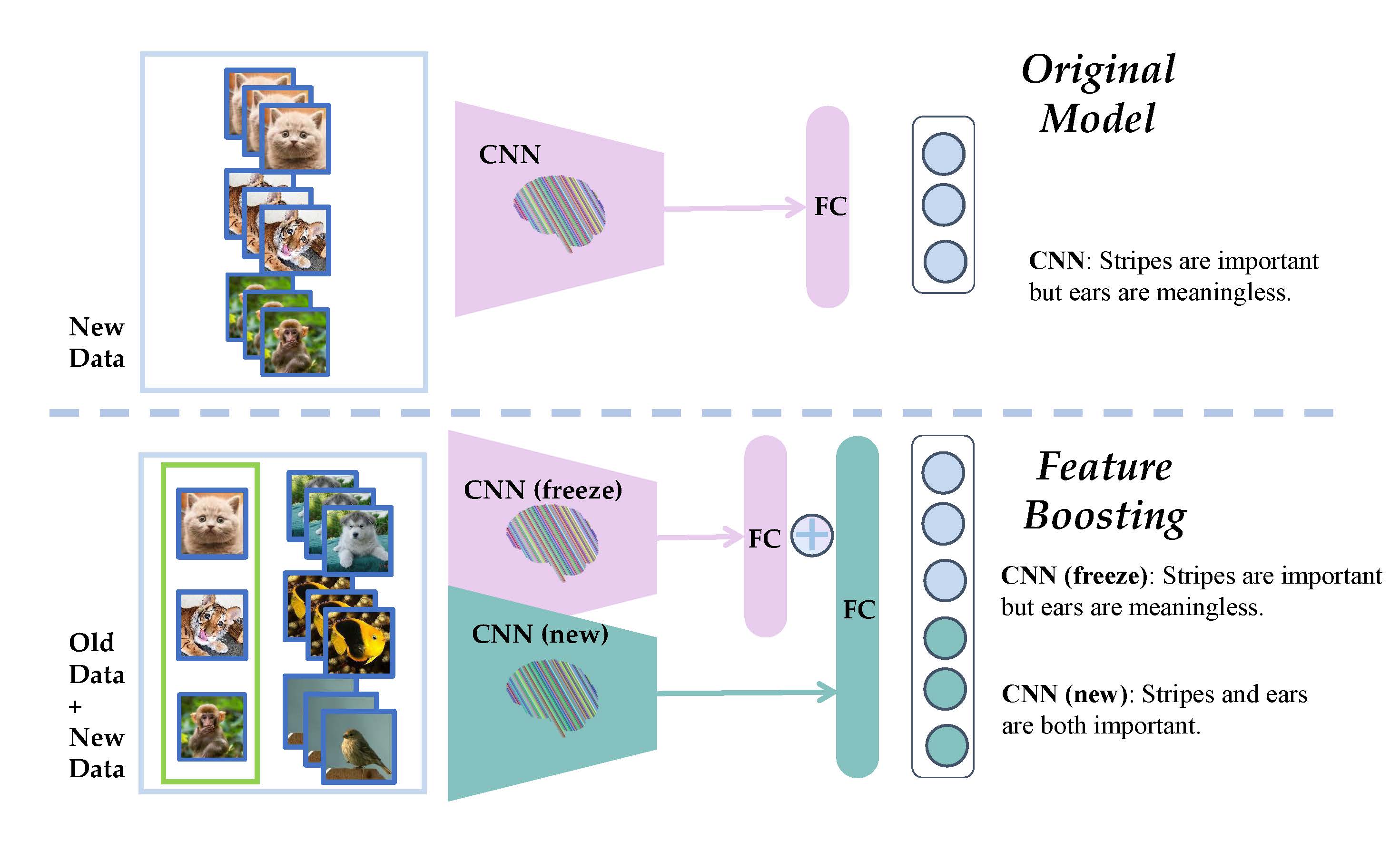

Class-Incremental Learning

Supervised by: Dr. Liu Liu, Prof. Yatao Bian

Education

The Chinese University of Hong Kong

Ph.D. in Engineering · 2023 - Present

Supervisors: Prof. Hongsheng Li & Prof. Xiaogang Wang

Nanjing University

B.Eng. in AI (Rank 2/88) · 2019 - 2023

Supervisors: Prof. Han-Jia Ye & Prof. Da-Wei Zhou

Selected Publications

Categorized by theme. Full list on Google Scholar.

Diffusion Post-Training: Acceleration & Reinforcement Learning

Generative Vision Applications

Class-Incremental Learning

Talks

Awards & Honors

- 2025: CVPR 2025 Outstanding Reviewer

- 2023: HKPFS (Hong Kong PhD Fellowship Scheme)

- 2023: Outstanding Graduate of Nanjing University

- 2023: Outstanding Undergraduate Thesis of NJU

- 2022: SenseTime Scholarship

- 2022: Huawei Scholarship

- 2021: National Scholarship

Academic Services

Reviewer for:

| Venue | Years |
|---|---|
| TPAMI | - |
| TCSVT / PRL | - |
| CVPR | 2023–2025 |
| NeurIPS | 2023, 2025 |
| ICLR | 2024, 2025 |
| ICML | 2024, 2025 |
| ECCV / ICCV | 2024 / 2025 |
| BMVC / SIGGRAPH Asia | 2024 / 2025 |