Fu-Yun Wang (pronounced "Foo-Yoon Wahng", IPA: [fu˧˥ yn˧˥ wɑŋ]) is a second-year Ph.D. candidate at MMLab@CUHK.

My research currently focuses on scalable post-training techniques for diffusion models and unified multimodal models.

I plan to enter the job market in 2027 and am open to overseas opportunities, including industry roles in generative AI and postdoctoral positions. Feel free to contact me early to discuss potential collaborations.

Internship Experience

Tencent AI Lab

Research Intern | 2022.6 - 2022.12

Worked on Class-Incremental Learning.

Supervised by: Dr. Liu Liu

Collaborated with Dr. Yatao Bian for instruction and discussions

Avolution AI (acquired by MiniMax)

Research Collaboration | 2023.10 - 2024.10

Worked on Video Diffusion Models and Diffusion Distillation.

Supervised by: Dr. Zhaoyang Huang

Collaborated with Dr. Xiaoyu Shi and Weikang Bian for instruction and discussions

Google DeepMind

Research Intern | 2025.2 - 2025.5

Focused on Diffusion Distillation and Reinforcement Learning.

Supervised by: Dr. Long Zhao, Dr. Ting Liu, Dr. Hao Zhou and Dr. LiangZhe Yuan

Collaborated with Prof. Bohyung Han and Prof. Boqing Gong for instruction and discussions

Reve Art

Research Intern | 2025.6 - Present

Focused on Multimodal Language Models, Diffusion Models, and Reinforcement Learning.

Supervised by: Dr. Han Zhang

Education

The Chinese University of Hong Kong (CUHK)

Ph.D. in Engineering | 2023 - Present

Supervisors: Professor Hongsheng Li and Professor Xiaogang Wang

Nanjing University

B.Eng. in Artificial Intelligence (Rank 2/88) | 2019 - 2023

Supervisors: Professor Han-Jia Ye and Professor Da-Wei Zhou (LAMDA Group)

Selected Publications

Below are selected publications, grouped by theme. For a complete list, please visit my Google Scholar profile.

Diffusion Post-Training: Acceleration & Reinforcement Learning

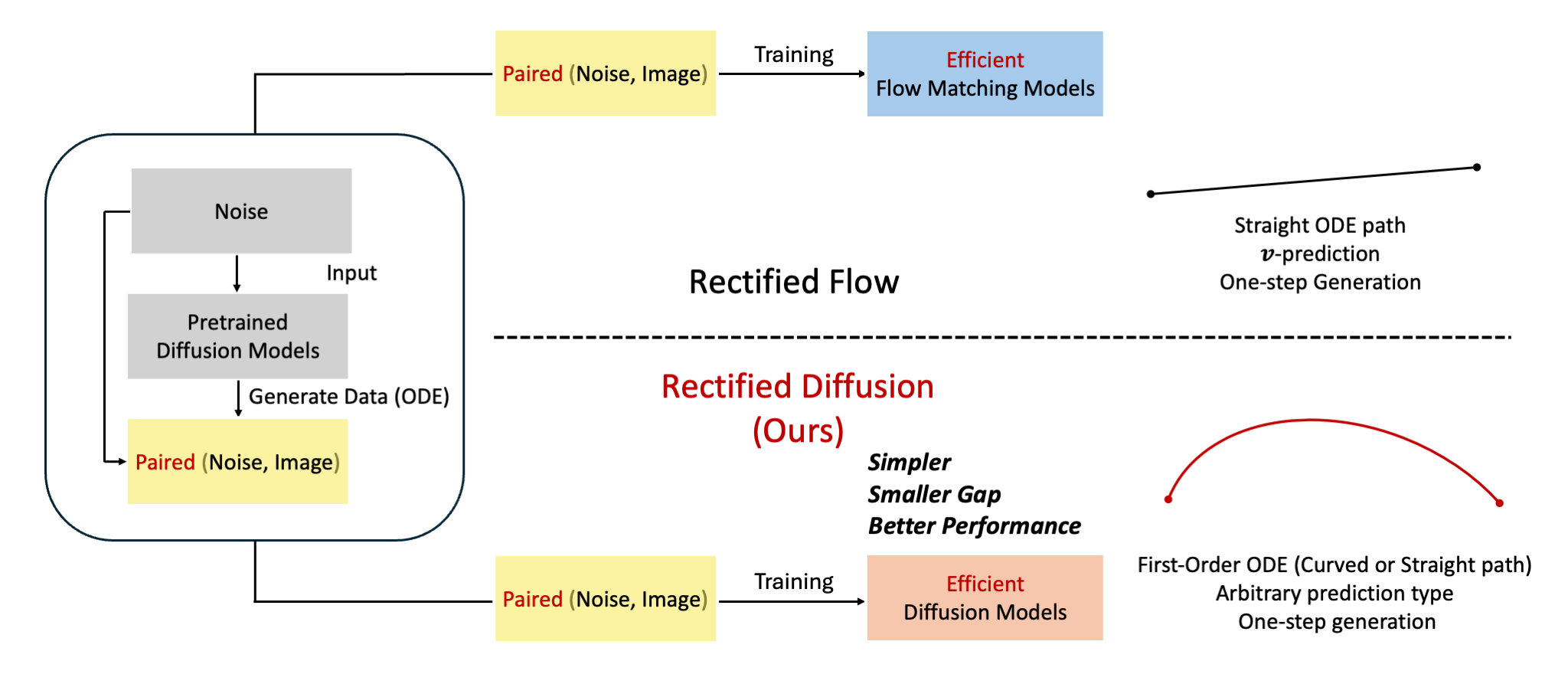

Rectified Diffusion: Straightness Is Not Your Need in Rectified Flow

Fu-Yun Wang, Ling Yang, Zhaoyang Huang, Mengdi Wang, Hongsheng Li

Thirteenth International Conference on Learning Representations. ICLR 2025.

We carried out a theoretical analysis and empirical validation of flow matching, rectified flow, and the rectification operation. We showed that the rectification operation also applies to general diffusion models, and that flow matching is fundamentally no different from the traditional noise addition methods in DDPM. Our related blog post on Zhihu received over 10k views and approximately 400 likes.

arXiv • GitHub • Poster

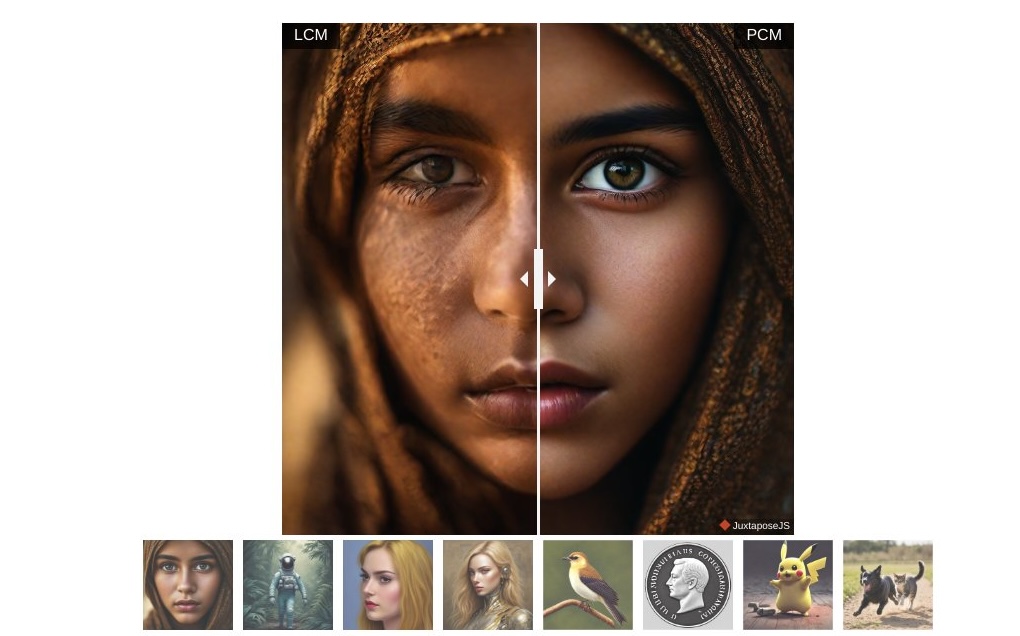

Phased Consistency Model

Fu-Yun Wang, Zhaoyang Huang, Alexander William Bergman, Dazhong Shen, Peng Gao, Michael Lingelbach, Keqiang Sun, Weikang Bian, Guanglu Song, Yu Liu, Xiaogang Wang, Hongsheng Li

Conference on Neural Information Processing Systems. NeurIPS 2024.

We validated and enhanced the effectiveness of consistency models for text-to-image and text-to-video generation. Our method has been adopted by the FastVideo project, successfully accelerating SoTA video diffusion models including HunyuanVideo and WAN.

Project Page • GitHub • Paper • Poster

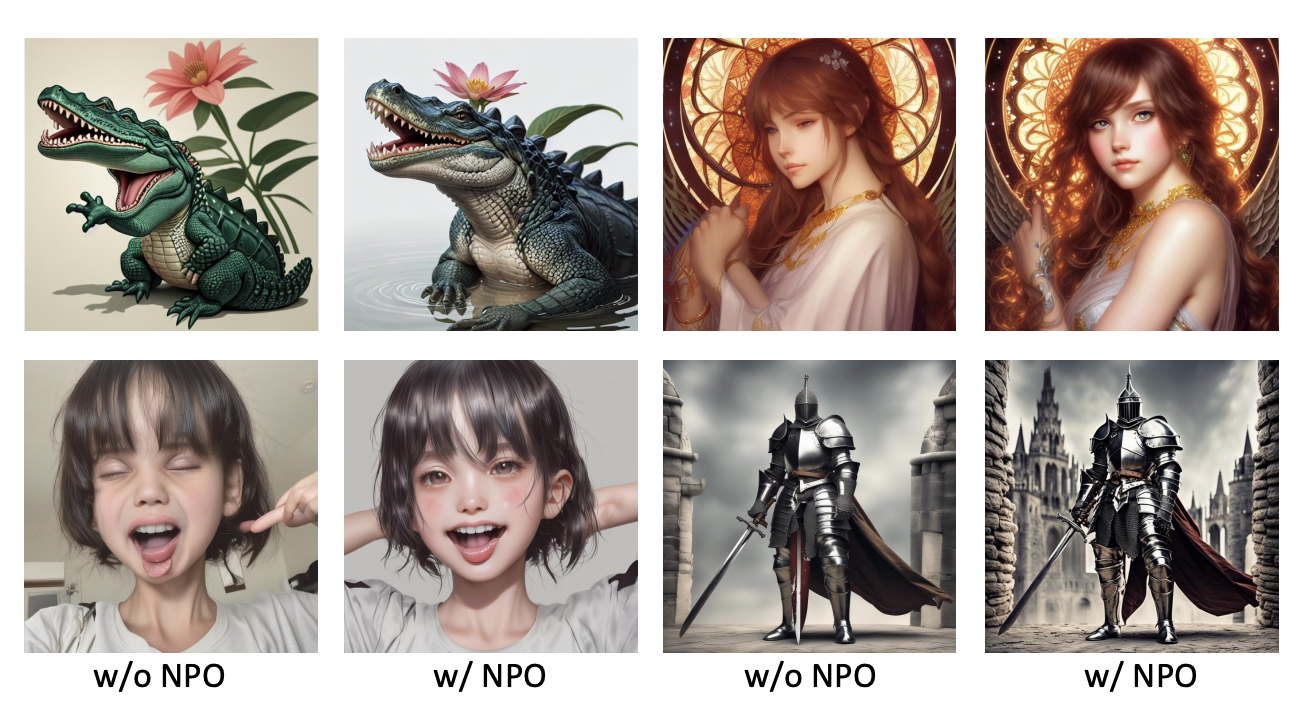

Diffusion-NPO: Negative Preference Optimization for Better Preference Aligned Generation of Diffusion Models

Fu-Yun Wang, Yunhao Shui, Jingtan Piao, Keqiang Sun, Hongsheng Li

Thirteenth International Conference on Learning Representations. ICLR 2025.

We proposed a general, simple yet effective method that strengthens diffusion preference optimization, improving the alignment of generated outputs with user preferences.

Paper • Poster • GitHub

Generative Vision Applications

Motion-I2V: Consistent and Controllable Image-to-Video Generation with Explicit Motion Modeling

Xiaoyu Shi*, Zhaoyang Huang*, Fu-Yun Wang*, Weikang Bian*, Dasong Li, Yi Zhang, Manyuan Zhang, Kachun Cheung, Simon See, Hongwei Qin, Jifeng Dai, Hongsheng Li

Special Interest Group on GRAPHics and Interactive Techniques. SIGGRAPH 2024.

SIGGRAPH 2024 Technical Papers Trailer

Project Page • GitHub • arXiv

ZoLA: Zero-Shot Creative Long Animation Generation with Short Video Model

Fu-Yun Wang, Zhaoyang Huang, Qiang Ma, Xudong Lu, Weikang Bian, Yijin Li, Yu Liu, Hongsheng Li

European Conference on Computer Vision. ECCV 2024.

ECCV 2024 Oral Presentation

Project Page • Paper

Class-Incremental Learning

PyCIL: A Python Toolbox for Class-Incremental Learning

Da-Wei Zhou*, Fu-Yun Wang*, Han-Jia Ye, De-Chuan Zhan

SCIENCE CHINA Information Sciences. SCIS.

PyCIL is a comprehensive, user-friendly Python toolbox for Class-Incremental Learning (CIL). With nearly 1,000 stars on GitHub, it is currently the most widely starred CIL toolkit and has been adopted by researchers worldwide. It provides a standardized framework for implementing and evaluating CIL algorithms, supporting reproducible research in the field.

GitHub • arXiv • Media

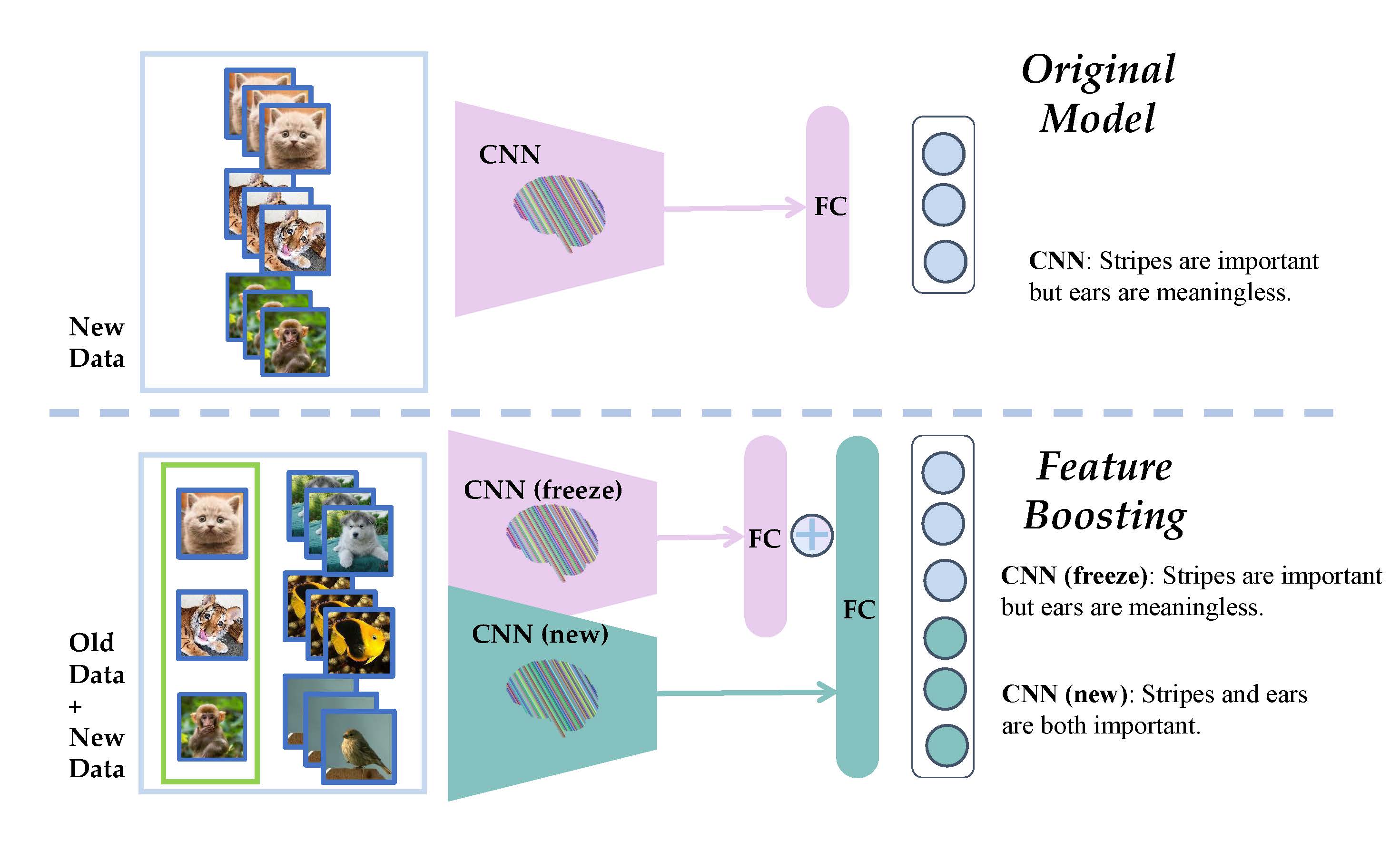

FOSTER: Feature Boosting and Compression for Class-Incremental Learning

Fu-Yun Wang, Da-Wei Zhou, Han-Jia Ye, De-Chuan Zhan

European Conference on Computer Vision. ECCV 2022.

FOSTER introduces a novel approach to Class-Incremental Learning by combining feature boosting and compression strategies. This method effectively mitigates catastrophic forgetting while promoting the learning of new classes, showcasing robust performance in dynamic learning environments.

GitHub • arXiv

Full Publications

- Self-NPO: Negative Preference Optimization of Diffusion Models by Simply Learning from Itself without Explicit Preference Annotations

  Fu-Yun Wang, Keqiang Sun, Yao Teng, Xihui Liu, Jiaming Song, Hongsheng Li

  AAAI 2026

- Speculative Jacobi-Denoising Decoding for Accelerating Autoregressive Text-to-image Generation

  Yao Teng, Fu-Yun Wang, Xian Liu, Zhekai Chen, Han Shi, Yu Wang, Zhenguo Li, Weiyang Liu, Difan Zou, Xihui Liu

  NeurIPS 2025

- Unleashing Vecset Diffusion Model for Fast Shape Generation

  Zeqiang Lai, Yunfei Zhao, Zibo Zhao, Haolin Liu, Fuyun Wang, Huiwen Shi, Xianghui Yang, Qingxiang Lin, Jingwei Huang, Yuhong Liu, Jie Jiang, Chunchao Guo, Xiangyu Yue

  ICCV 2025 (Highlight)

- Stable Consistency Tuning: Understanding and Improving Consistency Models

  Fu-Yun Wang, Zhengyang Geng, Hongsheng Li

  ICLR 2025 Workshop (Deep Generative Model in Machine Learning: Theory, Principle and Efficacy)

  arXiv • GitHub

- Rectified Diffusion: Straightness Is Not Your Need in Rectified Flow

  Fu-Yun Wang, Ling Yang, Zhaoyang Huang, Mengdi Wang, Hongsheng Li

  ICLR 2025

  arXiv • GitHub • Poster

- Diffusion-NPO: Negative Preference Optimization for Better Preference Aligned Generation of Diffusion Models

  Fu-Yun Wang, Yunhao Shui, Jingtan Piao, Keqiang Sun, Hongsheng Li

  ICLR 2025

  Paper • Poster • GitHub

- InstantPortrait: One-Step Portrait Editing via Diffusion Multi-Objective Distillation

  Zhixin Lai, Keqiang Sun, Fu-Yun Wang, Dhritiman Sagar, Erli Ding

  ICLR 2025

  Paper

- Phased Consistency Model

  Fu-Yun Wang, Zhaoyang Huang, Alexander William Bergman, Dazhong Shen, Peng Gao, Michael Lingelbach, Keqiang Sun, Weikang Bian, Guanglu Song, Yu Liu, Xiaogang Wang, Hongsheng Li

  NeurIPS 2024

  Project Page • GitHub • Paper • Poster

- OSV: One Step is Enough for High-Quality Image to Video Generation

  Xiaofeng Mao*, Zhengkai Jiang*, Fu-Yun Wang*, Wenbing Zhu, Jiangning Zhang, Hao Chen, Mingmin Chi, Yabiao Wang

  CVPR 2025

  arXiv

- GS-DiT: Advancing Video Generation with Pseudo 4D Gaussian Fields through Efficient Dense 3D Point Tracking

  Weikang Bian, Zhaoyang Huang, Xiaoyu Shi, Yijin Li, Fu-Yun Wang, Hongsheng Li

  CVPR 2025

  arXiv • Project Page

- AnimateLCM: Computation-Efficient Personalized Style Video Generation without Personalized Video Data

  Fu-Yun Wang, Zhaoyang Huang, Weikang Bian, Xiaoyu Shi, Keqiang Sun, Guanglu Song, Yu Liu, Hongsheng Li

  SIGGRAPH Asia 2024 Technical Communications

  Project Page • GitHub • arXiv

- ZoLA: Zero-Shot Creative Long Animation Generation with Short Video Model

  Fu-Yun Wang, Zhaoyang Huang, Qiang Ma, Xudong Lu, Weikang Bian, Yijin Li, Yu Liu, Hongsheng Li

  ECCV 2024 (Oral Presentation)

  Project Page • Paper

- Be-Your-Outpainter: Mastering Video Outpainting through Input-Specific Adaptation

  Fu-Yun Wang, Xiaoshi Wu, Zhaoyang Huang, Xiaoyu Shi, Dazhong Shen, Guanglu Song, Yu Liu, Hongsheng Li

  ECCV 2024

  Project Page • Paper • arXiv • GitHub

- Motion-I2V: Consistent and Controllable Image-to-Video Generation with Explicit Motion Modeling

  Xiaoyu Shi*, Zhaoyang Huang*, Fu-Yun Wang*, Weikang Bian*, Dasong Li, Yi Zhang, Manyuan Zhang, Kachun Cheung, Simon See, Hongwei Qin, Jifeng Dai, Hongsheng Li

  SIGGRAPH 2024

  Project Page • GitHub • arXiv

- Rethinking the Spatial Inconsistency in Classifier-Free Diffusion Guidance

  Dazhong Shen, Guanglu Song, Zeyue Xue, Fu-Yun Wang, Yu Liu

  CVPR 2024

  arXiv • GitHub

- FOSTER: Feature Boosting and Compression for Class-Incremental Learning

  Fu-Yun Wang, Da-Wei Zhou, Han-Jia Ye, De-Chuan Zhan

  ECCV 2022

  GitHub • arXiv

- BEEF: Bi-Compatible Class-Incremental via Energy-Based Expansion and Fusion

  Fu-Yun Wang, Da-Wei Zhou, Liu Liu, Han-Jia Ye, Yatao Bian, De-Chuan Zhan, Peilin Zhao

  ICLR 2023

  GitHub • Paper

- FACT: Forward Compatible Few-Shot Class-Incremental Learning

  Da-Wei Zhou, Fu-Yun Wang, Han-Jia Ye, Liang Ma, Shiliang Pu, De-Chuan Zhan

  CVPR 2022

  GitHub • arXiv

- PyCIL: A Python Toolbox for Class-Incremental Learning

  Da-Wei Zhou*, Fu-Yun Wang*, Han-Jia Ye, De-Chuan Zhan

  SCIENCE CHINA Information Sciences

  GitHub • arXiv • Media

- Diffusion-Sharpening: Fine-tuning Diffusion Models with Denoising Trajectory Sharpening

  Ye Tian, Ling Yang, Fu-Yun Wang, Xinchen Zhang, Yunhai Tong, Mengdi Wang, Bin Cui

  Preprint

- Lumina-Next: Making Lumina-T2X Stronger and Faster with Next-DiT

  Le Zhuo, Ruoyi Du, Han Xiao, Yangguang Li, Dongyang Liu, Rongjie Huang, Wenze Liu, Xiangyang Zhu, Fu-Yun Wang, Zhanyu Ma, Xu Luo, Zehan Wang, Kaipeng Zhang, Lirui Zhao, Si Liu, Xiangyu Yue, Wanli Ouyang, Yu Qiao, Hongsheng Li, Peng Gao

  NeurIPS 2024

- Trans4D: Realistic Geometry-Aware Transition for Compositional Text-to-4D Synthesis

  Bohan Zeng, Ling Yang, Siyu Li, Jiaming Liu, Zixiang Zhang, Juanxi Tian, Kaixin Zhu, Yongzhen Guo, Fu-Yun Wang, Minkai Xu, Stefano Ermon, Wentao Zhang

  Preprint

Talks

Awards & Honors

- 2025 CVPR 2025 Outstanding Reviewer

- 2023 HKPFS (Hong Kong PhD Fellowship Scheme)

- 2023 Outstanding Graduate of Nanjing University

- 2023 Outstanding Undergraduate Thesis of Nanjing University

- 2022 SenseTime Scholarship

- 2022 Huawei Scholarship

- 2021 National Scholarship

Services

| Journal/Conference | Years |
|---|---|
| IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) | - |
| IEEE Transactions on Circuits and Systems for Video Technology (TCSVT) | - |
| Pattern Recognition Letters (PRL) | - |
| Conference on Computer Vision and Pattern Recognition (CVPR) | 2023, 2024, 2025 |
| Neural Information Processing Systems (NeurIPS) | 2023, 2025 |
| International Conference on Learning Representations (ICLR) | 2024, 2025 |
| International Conference on Machine Learning (ICML) | 2024, 2025 |
| European Conference on Computer Vision (ECCV) | 2024 |
| International Conference on Computer Vision (ICCV) | 2025 |
| British Machine Vision Conference (BMVC) | 2024 |
| SIGGRAPH Asia | 2025 |